When downloading software to my database server, I used to first download it locally and then copy it to my Unix box… but wouldn't it be more convenient to download it directly onto the database server?

Quite often, there is no X, no browser and no Internet access in your datacenter. Therefore, we'll use wget for the purpose. curl is a similar tool that does the trick as well. By the way, wget also exists for Windows.

First, you need wget:

```
sudo yum install wget
```
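Since curl does the trick as well, a script can fall back to it when wget is missing. The following is a minimal sketch; the function name pick_downloader is hypothetical, and it only reports which of the two tools is on the PATH:

```shell
# POSIX sh. Hypothetical helper: print the name of the first available downloader.
# wget is preferred because the rest of this post uses it; curl is the fallback.
pick_downloader() {
  if command -v wget >/dev/null 2>&1; then
    echo wget
  elif command -v curl >/dev/null 2>&1; then
    echo curl
  else
    return 1  # neither tool found: install wget first
  fi
}
```

For example, `tool=$(pick_downloader) || sudo yum install wget` installs wget only when neither tool is present.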

Then, you need Internet access.

Ask your network colleagues for a proxy and request access to the following domains:

- edelivery.oracle.com

- aru-akam.oracle.com

- ccr.oracle.com

- login.oracle.com

- support.oracle.com

- updates.oracle.com

- oauth-e.oracle.com

- download.oracle.com

- epd-akam-intl.oracle.com

Some of these are documented in "Registering the Proxy Details for My Oracle Support", but I extended the list for software downloads (e.g. SQL Developer).
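Once the proxy is granted, you can check each domain from the database server before going further. This is a minimal sketch, assuming the proxy is already configured in .wgetrc or in the https_proxy environment variable (wget honors both). check_domain and check_all_domains are hypothetical names, and treating wget exit code 8 ("server issued an error response") as reachable is my own assumption: an HTTP error at least proves the request got through the proxy.

```shell
# POSIX sh. The domains from the list above (duplicates removed).
ORACLE_DOMAINS="edelivery.oracle.com aru-akam.oracle.com ccr.oracle.com login.oracle.com
support.oracle.com updates.oracle.com oauth-e.oracle.com download.oracle.com
epd-akam-intl.oracle.com"

# Hypothetical helper: probe one domain without downloading anything.
check_domain() {
  wget --spider --quiet --tries=1 --timeout=15 "https://$1/"
  rc=$?
  case $rc in
    0|8) echo "OK   $1" ;;                                  # 0 = success, 8 = HTTP error but reached
    *)   echo "FAIL $1 (wget exit $rc)"; return 1 ;;        # e.g. 4 = network failure
  esac
}

# Hypothetical helper: probe every domain; the return value is the number of failures.
check_all_domains() {
  failed=0
  for d in $ORACLE_DOMAINS; do
    check_domain "$d" || failed=$((failed + 1))
  done
  return "$failed"
}
```

Run check_all_domains and send the FAIL lines to your network colleagues.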

Now, configure your .wgetrc

https_proxy = proxy.example.com:8080

proxy_user = oracle

proxy_passwd = ***

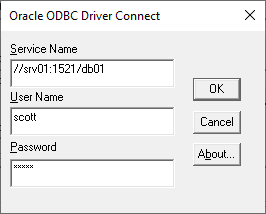

http_user = laurent.schneider@example.com

http_password = ***

https_proxy is the network proxy your database server uses to reach oracle.com. proxy_user and proxy_passwd may be required by your company proxy. http_user and http_password are your oracle.com (OTN/Metalink) credentials.
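Because this file holds clear-text passwords, it is worth making it readable by its owner only. A minimal sketch; write_wgetrc and the PROXY, PROXY_USER, PROXY_PASSWD, ORACLE_USER and ORACLE_PASSWD variables are hypothetical names of my own, not something wget defines:

```shell
# POSIX sh. Hypothetical helper: write a .wgetrc with mode 600.
write_wgetrc() {
  rc=${1:-$HOME/.wgetrc}
  # Create the file owner-only (umask 077 gives mode 600) if it does not exist yet
  ( umask 077 && touch "$rc" ) || return 1
  # Tighten an already existing file before any secret is written into it
  chmod 600 "$rc" || return 1
  printf '%s\n' \
    "https_proxy = ${PROXY}" \
    "proxy_user = ${PROXY_USER}" \
    "proxy_passwd = ${PROXY_PASSWD}" \
    "http_user = ${ORACLE_USER}" \
    "http_password = ${ORACLE_PASSWD}" > "$rc"
}
```

Called without an argument it writes ~/.wgetrc, which wget reads by default. To try a file elsewhere first, write it with write_wgetrc /tmp/wgetrc and point the WGETRC environment variable at it, e.g. WGETRC=/tmp/wgetrc wget ….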

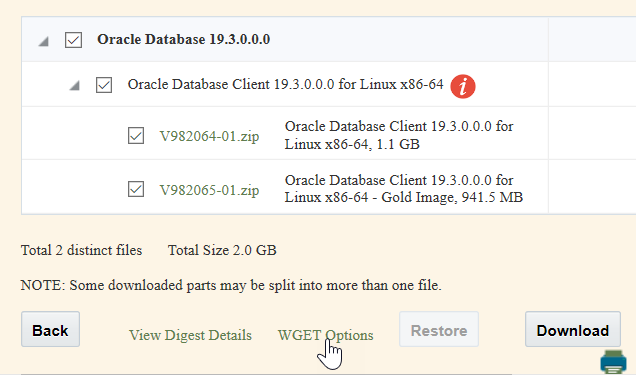

Then, to figure out the URL, either use the wget script Oracle sometimes provides, or copy the download link from your browser, e.g.

https://download.oracle.com/otn/java/sqldeveloper/sqldeveloper-20.2.0.175.1842-20.2.0-175.1842.noarch.rpm

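At this point, it probably won't work:

```
$ wget --no-check-certificate "https://download.oracle.com/otn/java/sqldeveloper/sqldeveloper-20.2.0.175.1842-20.2.0-175.1842.noarch.rpm"
```

The downloaded file is not the RPM but a small HTML page, as running strings or htmltree on it reveals: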

```
$ htmltree sqldeveloper-20.2.0.175.1842-20.2.0-175.1842.noarch.rpm
==============================================================================
Parsing sqldeveloper-20.2.0.175.1842-20.2.0-175.1842.noarch.rpm...
- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -
<html> @0
  <head> @0.0
    <script language="javascript" type="text/javascript"> @0.0.0
      "\x0afunction submitForm()\x0a{\x0avar hash = location.hash;\x0aif (hash) {\x0aif..."
    <base target="_self" /> @0.0.1
  <body onload="submitForm()"> @0.1
    <noscript> @0.1.0
      <p> @0.1.0.0
        "JavaScript is required. Enable JavaScript to use OAM Server."
    <form action="https://login.oracle.com/mysso/signon.jsp" method="post" name="myForm"> @0.1.1
```

We haven't logged in.
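In a script, you can catch this by checking the file signature: an RPM package starts with the four lead bytes ED AB EE DB, whereas this login page starts with HTML. A minimal sketch; is_rpm is a hypothetical name:

```shell
# POSIX sh. Hypothetical helper: succeed only if the file starts with the RPM lead magic.
is_rpm() {
  # Dump the first 4 bytes as hex and drop the spaces/newline od adds
  magic=$(head -c 4 "$1" 2>/dev/null | od -An -tx1 | tr -d ' \n')
  [ "$magic" = "edabeedb" ]
}
```

For example, is_rpm sqldeveloper-20.2.0.175.1842-20.2.0-175.1842.noarch.rpm || echo "not an RPM, probably the login page". For a genuine package, rpm -qip shows its header information.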

Let's get the login cookie:

```
wget --no-check-certificate --save-cookies=mycookie.txt --keep-session-cookies https://edelivery.oracle.com/osdc/cliauth
```

Your mycookie.txt file should now contain your login.oracle.com session cookies.
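The cookie file uses the tab-separated Netscape format (domain, subdomain flag, path, secure flag, expiry, name, value), so listing its oracle.com entries is a quick way to confirm that the login step did something. A minimal sketch; list_oracle_cookies is a hypothetical name, and stripping a #HttpOnly_ prefix is only a precaution for cookie files written by curl:

```shell
# POSIX sh. Hypothetical helper: print "domain name" for every oracle.com cookie.
list_oracle_cookies() {
  awk 'BEGIN { FS = "\t" }
       { sub(/^#HttpOnly_/, "") }                         # curl marks HttpOnly cookies this way
       !/^#/ && NF >= 7 && $1 ~ /oracle\.com$/ { print $1, $6 }' "$1"
}
```

An empty result from list_oracle_cookies mycookie.txt means the login did not succeed, for instance because of wrong http_user or http_password settings in .wgetrc.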

Depending on the piece of software (e.g. SQL Developer), an AuthParam must be passed in. You can see the AuthParam once you start the download in your browser, e.g. in your Downloads list (CTRL-J). If you use the wget script Oracle provides (when available), it probably passes a token= instead of an AuthParam=. The AuthParam typically proves that you accepted the license, and it possibly expires after 30 minutes. Maybe you could read the cookie and figure out how to accept the license without the AuthParam, but I haven't gone that far yet.

```
wget --load-cookies=mycookie.txt --no-check-certificate "https://download.oracle.com/otn/java/sqldeveloper/sqldeveloper-20.2.0.175.1842-20.2.0-175.1842.noarch.rpm?AuthParam=1111111111_ffffffffffffffffffffffffffffffff"
```
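By default, wget names the local file after the original URL including its query string, so this command saves a file whose name ends in .rpm?AuthParam=…. A minimal sketch that strips the query string and passes a clean name to -O; local_name and oracle_download are hypothetical names, and mycookie.txt is the cookie file from the commands above:

```shell
# POSIX sh. Hypothetical helper: last path segment of a URL, without ?query or #fragment.
local_name() {
  path=${1%%\?*}      # drop everything from the first "?"
  path=${path%%#*}    # drop a fragment, if any
  printf '%s\n' "${path##*/}"
}

# Hypothetical wrapper around the download command above.
oracle_download() {
  url=$1
  out=$(local_name "$url")
  # Reuses the session cookies saved by the cliauth step. --no-check-certificate is
  # left out on purpose; add it back only if certificate checks fail behind your proxy.
  wget --load-cookies=mycookie.txt -O "$out" "$url"
}
```

Usage: oracle_download "https://download.oracle.com/otn/java/sqldeveloper/sqldeveloper-20.2.0.175.1842-20.2.0-175.1842.noarch.rpm?AuthParam=…" saves sqldeveloper-20.2.0.175.1842-20.2.0-175.1842.noarch.rpm.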

A long post for a short mission, downloading a file…

Let me remind you that using --no-check-certificate and clear-text passwords in .wgetrc isn't good security practice.
