I had fun answering a question about random on the technical forums.

What is in your opinion the boolean value of DBMS_RANDOM.VALUE=DBMS_RANDOM.VALUE?

Or, how many rows would

select * from dual where dbms_random.value=dbms_random.value;

return?

It is wrong to assume the function will be evaluated twice.

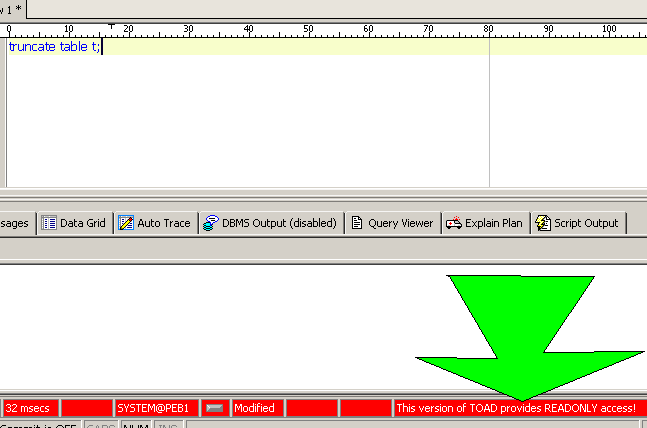

The short answer would be : do not rely on random plsql functions in SQL…

here is a test case in 11.2.0.2 and 10.2.0.3

SQL> select version from v$instance;

VERSION

-----------------

10.2.0.3.0

SQL> select * from dual where dbms_random.value=dbms_random.value;

no rows selected

Execution Plan

----------------------------------------------------------

Plan hash value: 1224005312

---------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

---------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 1 | 2 | 2 (0)| 00:00:01 |

|* 1 | FILTER | | | | | |

| 2 | TABLE ACCESS FULL| DUAL | 1 | 2 | 2 (0)| 00:00:01 |

---------------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

1 - filter("DBMS_RANDOM"."VALUE"()="DBMS_RANDOM"."VALUE"())

In 10g, the function is executed twice per row, and the chance to have two different values is more than 99.9999…%.

SQL> select version from v$instance;

VERSION

-----------------

11.2.0.2.0

SQL> select * from dual where dbms_random.value=dbms_random.value

D

-

X

Execution Plan

----------------------------------------------------------

Plan hash value: 1224005312

---------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

---------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 1 | 2 | 2 (0)| 00:00:01 |

|* 1 | FILTER | | | | | |

| 2 | TABLE ACCESS FULL| DUAL | 1 | 2 | 2 (0)| 00:00:01 |

---------------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

1 - filter("DBMS_RANDOM"."VALUE"() IS NOT NULL)

Here the optimized execute the function only once per row, and since the result is never null, it always evaluates to true.

Is this a bug or a feature?

In my opinion it is a confusing tuning enhancement that may break badsome programs.

In this thread, I mentioned that prior dbms_random.value is not null is an unsafe construct.